I’ve been a trader and investor for 44 years. I left Wall Street long ago, once I understood that their obsolete advice is designed to profit them, not you.

Today, my firm manages around $5 billion in ETFs, and I don’t answer to anybody. I tell the truth because trying to fool investors doesn’t help them, or me.

In Daily H.E.A.T., I show you how to Hedge against disaster, find your Edge, exploit Asymmetric opportunities, and ride major Themes before Wall Street catches on.

I’m hosting a webinar entitled “Why Covered Call ETFs Suck and What to Do Instead” on December 9, 2-3 PM (more info below). Sign up here.


H.E.A.T.

For a long time, I have used NVDA as an example of how to pick the obvious winner in a major theme. More and more, cracks are starting to form in the “obvious” part of that equation as competition and doubts emerge. Today we talk about some of that competition.

Google vs. Nvidia: Who Actually Wins This AI War?

The market finally got the thing it’s been half‑expecting and half‑dismissing: a credible, production‑scale alternative to Nvidia inside a real AI product. Google’s Gemini 3 isn’t just another “whizzy chatbot.” It’s a flagship model that third‑party benchmarks say outperforms OpenAI’s latest on key reasoning tasks and was trained end‑to‑end on Google’s own TPUs, not Nvidia GPUs.

That’s the part that matters. Alphabet has gone from “maybe they missed AI” to “best‑performing Mag7 name,” up ~68% this year and pushing toward a $4 trillion market cap, with Broadcom, Celestica, and Lumentum screaming higher as the TPU supply chain gets repriced.

Nvidia, meanwhile, is still near peak revenue and earnings, but the narrative has cracked: its stock is down almost 10% this month and we just watched another “DeepSeek moment” where investors realized cheaper, more efficient AI is possible on non‑Nvidia hardware.

Under the hood, this isn’t just Google vs. Nvidia; it’s hyperscalers vs. merchant silicon. Google, Meta, OpenAI and others are racing to design their own accelerators and using Broadcom and similar partners to turn those designs into custom ASICs at scale.

Google’s TPU line is now on its seventh generation, Ironwood, which is optimized for high‑volume inference at lower cost and power than general‑purpose GPUs.

Meta is reportedly kicking the tires on buying billions of dollars’ worth of Google TPUs for its own data centers, a direct shot across Nvidia’s bow and even AMD’s, which has been courting Meta with its own GPUs.

If that deal happens, we’re no longer talking about “Google’s in‑house chip for Google workloads.” We’re talking about a second AI hardware platform, sold to third parties, with price/performance that can undercut GPU‑only clouds. That’s why Nvidia and AMD sold off on the headlines and why Oracle—sitting on billions of dollars of Nvidia GPUs it rents out—is suddenly in the crosshairs of every TPU slide deck.

Can Google actually “overtake” Nvidia?

In pure market‑cap terms, it’s already getting tight. After this latest move, the gap between Nvidia and Alphabet has shrunk to roughly half a trillion dollars, the smallest in months.

At current sizes, that’s basically one bad month for Nvidia and one good month for Google. If you force me to handicap it: I’d say there’s a better‑than‑even chance we see Google briefly trade above Nvidia in market cap sometime in the next 12–24 months, not because Nvidia “dies,” but because investors re‑rate the idea that one company gets all of the AI economics.
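To put rough numbers on the “one bad month, one good month” point, here is a back-of-the-envelope sketch in Python. The two market caps are placeholder assumptions chosen only to be consistent with the roughly half-trillion-dollar gap described above; they are not quotes, and the function name is mine. It solves for the equal-and-opposite percentage move that would bring the two companies level.

```python
def symmetric_move_to_close_gap(leader_cap: float, challenger_cap: float) -> float:
    """Return the fraction x such that leader * (1 - x) == challenger * (1 + x).

    In words: the leader falls by x while the challenger rises by x,
    and the two market caps end up equal.
    """
    if leader_cap <= 0 or challenger_cap <= 0:
        raise ValueError("market caps must be positive")
    return (leader_cap - challenger_cap) / (leader_cap + challenger_cap)


# Hypothetical market caps in trillions of dollars (illustrative assumptions,
# picked only so the gap is about $0.5 trillion).
nvidia_cap = 4.3
alphabet_cap = 3.8

move = symmetric_move_to_close_gap(nvidia_cap, alphabet_cap)
print(f"Each stock needs to move about {move:.1%} in opposite directions")
```

Under those assumed inputs the answer is a mid-single-digit percentage move on each side, which is well within what a large-cap tech stock can do in a month. Different starting caps change the exact figure but not the basic point.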

Where I’m much more conservative is on infrastructure dominance. Nvidia still owns the training stack at scale—CUDA, cuDNN, libraries, developer mindshare, and a roadmap of H‑class GPUs that everyone from hyperscalers to Chinese tech giants is still lining up to buy.

Google’s TPUs, and custom ASICs more broadly, are a much bigger threat to inference economics and pricing power than to Nvidia’s near‑term training volumes. The more Google proves you can run frontier‑class models on cheaper silicon, the more the whole ecosystem shifts from “pay whatever Nvidia asks” to “optimize relentlessly for cost per token.” That doesn’t kill Nvidia, but it does cap how crazy the margin story can get.

Winners, losers, and what it means for the AI stack

Likely Winners

Alphabet (GOOGL / GOOG) – Full‑stack AI (Gemini + Search + Cloud) and a credible hardware story. A stand‑alone TPU/DeepMind business is being valued by some analysts near $1 trillion, and the stock has already added ~$1.5 trillion in market cap this year.

Broadcom (AVGO) – The cleanest pure‑play on the custom‑ASIC wave. Google’s TPUs alone could be >$10B in annual revenue for Broadcom, and Jefferies has now crowned it their top semi pick over Nvidia on the back of that AI ASIC pipeline.

Google’s supply chain (CLS, LITE, others) – Celestica, Lumentum and peers are showing you in real time what happens when a new AI platform wins: big beat‑and‑raise quarters and double‑digit one‑day pops as hyperscalers ramp 800G/optical and accelerator boards.

Other custom‑silicon & networking names (MRVL, etc.) – Marvell and similar players ride the same structural trend: the hyperscalers are done letting one merchant vendor capture all the economics. ASIC + fat networking is now the playbook at every cloud.

Hyperscalers with real AI demand (MSFT, AMZN, META) – The more competition in hardware, the better life is for large buyers who can pit Nvidia, custom ASICs, and their own designs against each other while passing lower unit costs into more AI products.

Likely Losers (or at least “less obvious winners”)

Nvidia (NVDA) – Still the default training platform, but the “no alternative” narrative is dead. We’ve now seen:

DeepSeek run a serious model on cheaper hardware and wipe almost $600B off Nvidia in a day,

Google train a frontier model entirely on TPUs,

and hyperscalers openly exploring alternatives.

That doesn’t mean Nvidia can’t grow; it means the upside path involves more competition and less infinite pricing power than the most optimistic AI bros hoped.

AMD (AMD) – Caught in a tough spot: it’s trying to claw share from Nvidia in GPUs at the exact moment big buyers are shifting some workloads to ASICs. When Google/Meta news hits, AMD sells off alongside Nvidia, which tells you where the market thinks the incremental dollars are headed.

GPU‑heavy resellers (e.g., Oracle, some cloud resellers) – If your moat was “we bought a ton of Nvidia and we rent it out,” you now face the prospect of Google‑style AI clouds with lower cost per inference and Meta/OpenAI rolling their own accelerators with partners like Broadcom.

Anyone paying peak‑bubble multiples for AI with no real earnings – A cheaper‑compute world is great for users but rough for the weakest business models. If more value flows to platforms (Google, Microsoft, Amazon) and custom‑silicon enablers (Broadcom, Marvell), then the more speculative “AI wrappers” are the shock absorber.

So what are the “must‑owns” in this setup?

Not investment advice, but if I’m building an AI infrastructure basket for this new phase, my core has:

Alphabet (GOOGL / GOOG) – You get:

a top‑tier frontier model (Gemini 3),

the TPU stack and a growing chance of selling that silicon externally,

plus Search/YouTube/Ads as monetization rails.

It’s the clearest “winner of AI plus owner of the demand funnel.”

Nvidia (NVDA) – I don’t think you throw out the king just because the court got crowded. Training still needs insane amounts of general‑purpose compute, and every efficiency breakthrough so far has eventually translated into more AI workloads, not fewer. Nvidia will likely lose some pricing power over time, but if the AI TAM is durable, they can both grow and get derated a bit and still be fine.

Broadcom (AVGO) – This is the arms dealer for the custom‑silicon revolution: Google’s TPUs, OpenAI’s announced accelerators, other hyperscalers’ ASICs, plus high‑end networking. It’s the cleanest way to bet on “the future is specialized silicon,” without having to pick a single model winner.

Then around that core, I’d consider higher‑beta satellites sized appropriately:

Celestica (CLS) and Lumentum (LITE) as ways to express the “TPU + optical + 800G” build‑out.

Marvell (MRVL) for custom accelerators and data‑center networking across non‑Google hyperscalers.

One or two platform names (MSFT, AMZN, META) so you’re long AI usage broadly, not just one chip vendor.

The big picture: Google just proved that Nvidia isn’t the only way to do state‑of‑the‑art AI at scale. That’s bad for Nvidia’s myth, but not necessarily bad for Nvidia’s business if the opportunity stays as big as it looks. The real trade from here is less “pick one winner” and more “own the platforms and the plumbing that get paid no matter which logo is on the chip.”

News vs. Noise: What’s Moving Markets Today

In today’s News vs. Noise, let’s talk about the market’s new favorite fantasy: a third “insurance” cut in December. I’m not sure how much a December cut matters in the long run, as a pause in December makes a cut in January almost certain. However, a lot of last week’s weakness was probably a re-rating of the December odds, so any Fed pivot is probably a good sign for those who want a Santa Claus rally.

It is simultaneously shocking and completely on‑brand that excitement has exploded again. The 12‑member FOMC is genuinely split. Miran, Waller, Williams, Jefferson (and likely Bowman) are openly comfortable taking rates lower, while Musalem, Schmid, Goolsbee, Collins, Barr – and, based on his recent comments, Powell himself – are leaning toward a hold. Governor Cook is stuck in the middle. Add in the new political buzzword of the month (“affordability crisis”) and you can see why the Fed is starting to look more like Congress than an independent technocratic body.

What makes this debate absurd is the macro backdrop. The Fed has already delivered two 25 bp “insurance” cuts into what’s likely to be a ~4% Q3 GDP print, with unemployment at 4.4% (still lower than ~¾ of all readings in the last 77 years) and core CPI running around 3%, a measure that hasn’t been near target since 2021. On top of that, there are growing signs of froth and speculative behavior in private credit. Any Q4 weakness will be largely self‑inflicted via a government shutdown and policy whiplash. Against that, Powell’s allies (Williams, Daly) are now publicly laying the groundwork for a December move, arguing that a weakening labor market is the bigger risk and hinting at a “cut now, then hold” strategy. The fact that we’re even debating a third cut, with incomplete data and a still‑elevated inflation backdrop, is the real story: it shows how far the Fed has drifted toward accepting 3% inflation as the new normal.

Takeaways

The committee is fractured. December isn’t about “the data” as much as which faction Powell decides to side with – and how many dissents he’s willing to tolerate.

Markets are reacting to speeches, not stats. One dovish Williams speech in Chile was enough to flip December cut odds from ~40% to ~80% and rip equities higher. That’s pure narrative, not new information.

We’re already in insurance‑cut territory. Two cuts into ~4% GDP, 4.4% unemployment, and 3% core inflation is not “data dependent” – it’s regime change toward tolerating structurally higher inflation.

“Affordability crisis” = inflation fatigue. Politicians finally discovered that voters hate 3%+ inflation; the Fed is implicitly signaling they can live with it. That gap is where anger – and policy error – lives.

Cut vs. hold is a bad choice either way.

Cut in December: short‑term sugar high for risk assets, more damage to inflation credibility.

Hold until January: more market drama, but at least you’re reacting to real data instead of vibes.

Positioning lens: treat a December cut as already priced into risk assets. The real edge is in understanding the new reaction function: a Fed that quietly prefers protecting the labor market and asset prices over making a genuine run at 2% inflation.

A Stock I’m Watching

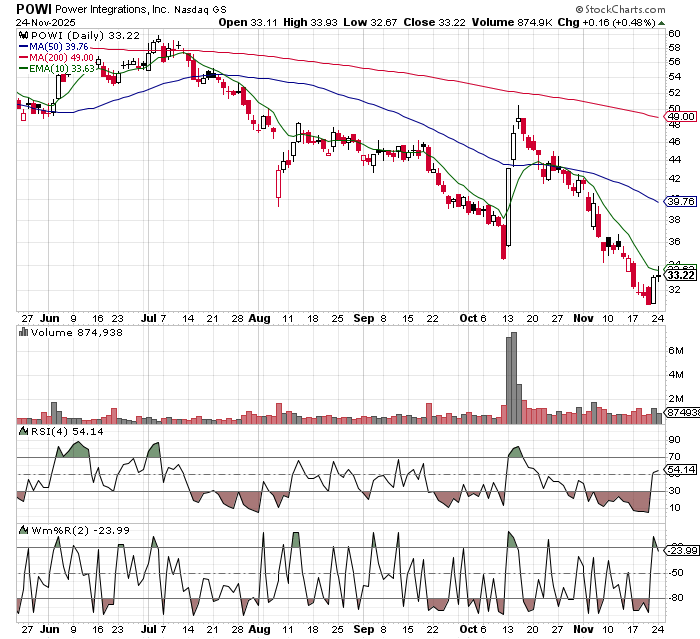

Today’s stock is Power Integrations (POWI).

POWI is an AI picks-and-shovels play with a reasonable base-case valuation. Look for a potential undercut and rally at the 10-day moving average.

How Else I Can Help You Beat Wall Street at Its Own Game

Inside H.E.A.T. is our monthly webinar series. Sign up for this month’s webinar below.

Why Covered Call ETFs Suck and What to Do Instead

Tuesday, December 9, 2-3 PM EST

Covered call ETFs are everywhere, and everyone thinks they’ve found a “safe” way to collect yield in a sideways market.

The truth?

They cap your upside, mislead investors with “yield” that’s really your own money coming back, and often trail just owning the stock by a mile.

Join me for a brutally honest breakdown of how these funds actually work, and what you should be doing instead.

What You’ll Learn:

🔥 Why “high yield” covered call ETFs are often just returning your own capital

The H.E.A.T. (Hedge, Edge, Asymmetry and Theme) Formula is designed to empower investors to spot opportunities, think independently, make smarter (often contrarian) moves, and build real wealth.

The views and opinions expressed herein are those of the Chief Executive Officer and Portfolio Manager for Tuttle Capital Management (TCM) and are subject to change without notice. The data and information provided is derived from sources deemed to be reliable but we cannot guarantee its accuracy. Investing in securities is subject to risk including the possible loss of principal. Trade notifications are for informational purposes only. TCM offers fully transparent ETFs and provides trade information for all actively managed ETFs. TCM's statements are not an endorsement of any company or a recommendation to buy, sell or hold any security. Trade notification files are not provided until full trade execution at the end of a trading day. The time stamp of the email is the time of file upload and not necessarily the exact time of the trades. TCM is not a commodity trading advisor and content provided regarding commodity interests is for informational purposes only and should not be construed as a recommendation. Investment recommendations for any securities or product may be made only after a comprehensive suitability review of the investor’s financial situation.

© 2025 Tuttle Capital Management, LLC (TCM). TCM is a SEC-Registered Investment Adviser. All rights reserved.